Apache Kafka streaming pipelines are popular for collecting, storing, and moving high-volume data. Multi-cloud applications are becoming more common and more mission-critical. However, most multi-cloud environments start out using the public Internet for interconnectivity, which comes with no latency guarantees.

For highly transactional systems like Kafka, our thesis is that latency matters more than raw bandwidth and has a real impact on performance in a multi-cloud deployment. In this blog, we’ll show how we tested this thesis by comparing the throughput of Kafka stream processing between AWS and Azure, when connected via the Internet versus a private network service.

Defining terms

A quick overview of Kafka and the test scenario:

Briefly, Kafka’s service is provided by brokers, which manage topics. A topic is analogous to a mailbox. Producers send messages (the mail) to a topic; in this test, the messages are sent in batches. These concepts are covered in detail in the Apache Kafka documentation (Main Concepts and Terminology) at https://kafka.apache.org/documentation/.

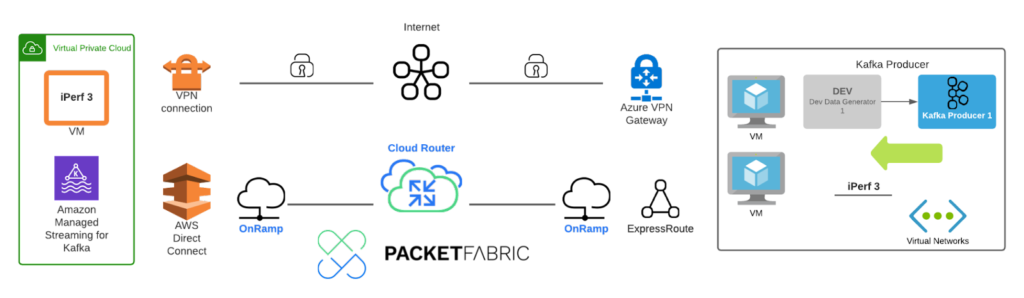

In our test, we measured comparative AWS-Azure throughput (Producer in Azure and Broker/Client in AWS) when connected via:

- Case 1: Internet with site-to-site VPN

- Case 2: PacketFabric Virtual Cloud Router

In Case 1, the site-to-site VPN is an encrypted tunnel over the public Internet. There are minor differences in both terminology (types of gateways) and capability (number of tunnels and expected throughput) between the Azure and AWS VPN implementations. We normalized these in our test configuration to ensure an expected throughput of 1Gbps.

In Case 2, we provisioned a 1Gbps EVPL circuit on PacketFabric, with routing provided by our Virtual Cloud Router product. PacketFabric is a private, secure, and performant network service alternative to the Internet for connecting multi-cloud instances. Unlike the Internet, PacketFabric’s network offers latency guarantees (SLAs).

The measure of performance for this application is how fast the Producer can fill the mailbox, that is, how many “batches per second” can be delivered over an extended period of time in each test case.

Test topology

To facilitate the test, the following services for both the VPN and private connectivity (via PacketFabric) cases were used:

Comparing the performance results

Network latency comparison

A long-running ICMP Echo (“ping”) test provides some high-level statistics that set the stage for the relative performance of the two cases we tested.

| Statistic (ms) | VPN | PacketFabric Virtual Cloud Router |
| --- | --- | --- |
| RTT min | 70.8 | 62.1 |
| RTT avg | 132.9 | 62.5 |
| RTT max | 193.2 | 68.1 |
| RTT max deviation | 25.1 | 0.7 |
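If you want to produce the same kind of summary from your own RTT measurements, here is a minimal Python sketch. The sample values in the usage note are made up for illustration, and `summarize_rtt` is a hypothetical helper name. We also assume the deviation figure is a population standard deviation, similar to the `mdev` value that Linux `ping` reports; the table above does not state the exact formula.

```python
import statistics


def summarize_rtt(samples_ms):
    """Summarize round-trip-time samples (milliseconds).

    Returns min, average, max, and deviation. The deviation is a
    population standard deviation, which is an assumption about how
    the deviation row in the table was computed.
    """
    if not samples_ms:
        raise ValueError("need at least one RTT sample")
    return {
        "min": min(samples_ms),
        "avg": statistics.fmean(samples_ms),
        "max": max(samples_ms),
        "dev": statistics.pstdev(samples_ms),
    }
```

For example, `summarize_rtt([60.0, 62.0, 64.0])` reports a minimum of 60, an average of 62, and a maximum of 64 ms. A path with wide swings between samples shows up as a large deviation, which is the pattern in the VPN column.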

Pipeline throughput comparison

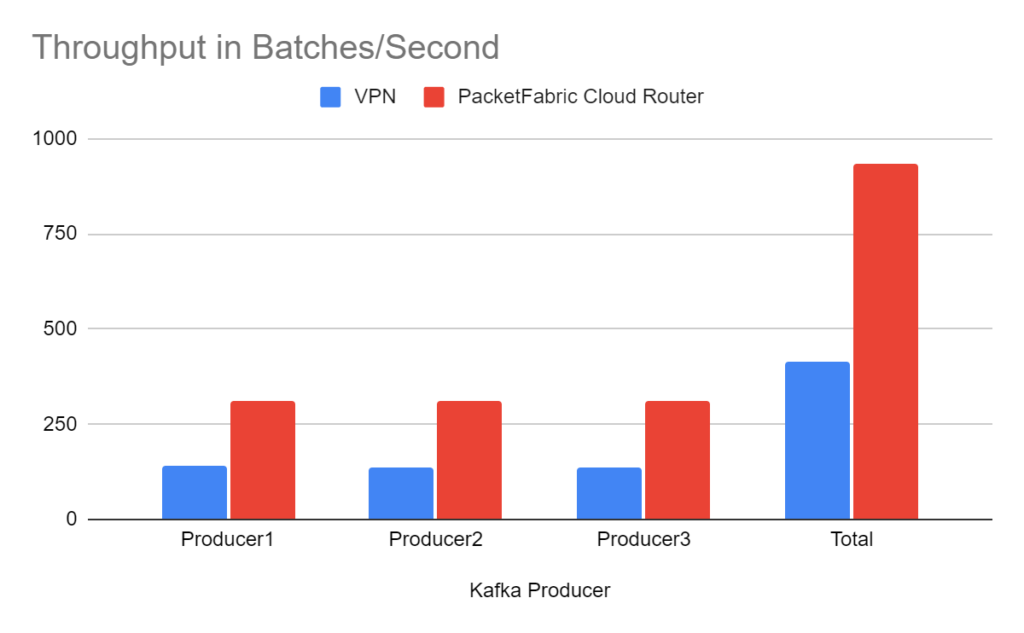

We configured the Kafka producer to create batches of random record data. Each batch is sent as a message to a Kafka topic, from the producer in Azure to the brokers in AWS.
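To make the measurement concrete, here is a rough Python sketch of a batch generator and a “batches per second” meter. This is not the harness we used in the test. The batch size, record size, and the names `make_batch` and `measure_batches_per_second` are illustrative assumptions, and `send_batch` is a placeholder for whatever function hands a batch to your Kafka client and waits for the acknowledgement.

```python
import os
import time


def make_batch(num_records=100, record_bytes=1024):
    """Build one batch of random record payloads (sizes are illustrative)."""
    return [os.urandom(record_bytes) for _ in range(num_records)]


def measure_batches_per_second(send_batch, num_batches=50,
                               clock=time.perf_counter):
    """Send num_batches batches and return the achieved batches per second.

    send_batch is a caller-supplied callable (hypothetical here) that
    delivers one batch and returns once it has been acknowledged.
    """
    if num_batches < 1:
        raise ValueError("num_batches must be at least 1")
    start = clock()
    for _ in range(num_batches):
        send_batch(make_batch())
    elapsed = clock() - start
    # Guard against a zero-length interval on very coarse clocks.
    return num_batches / elapsed if elapsed > 0 else float("inf")
```

With a stand-in sender such as `lambda batch: time.sleep(0.005)`, the meter reports somewhat under 200 batches per second, because each send waits at least 5 ms. Swapping in a real sender lets the same loop compare two network paths.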

We used iperf3 to generate background traffic, keeping it below the 1Gbps PacketFabric circuit speed and below the reported 1.25Gbps IPsec capability of the site-to-site VPN.

Measuring “batches per second” across the two networks exposes the impact of latency and its variation. As you can see in the results below, PacketFabric’s Virtual Cloud Router, with a 1Gbps connection, outperformed the site-to-site VPN by 225%.

| Producer | VPN | PacketFabric Virtual Cloud Router |
| --- | --- | --- |
| Producer1 | | |
| Producer2 | | |
| Producer3 | | |

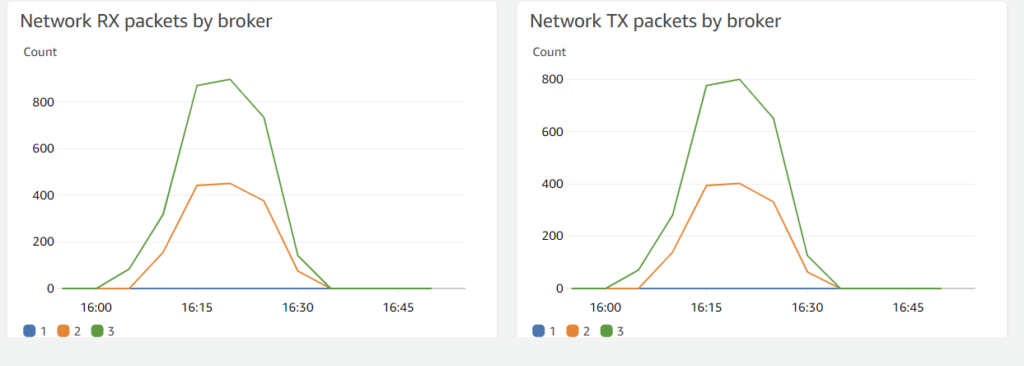

Confirmation with System Monitors

AWS MSK Monitoring

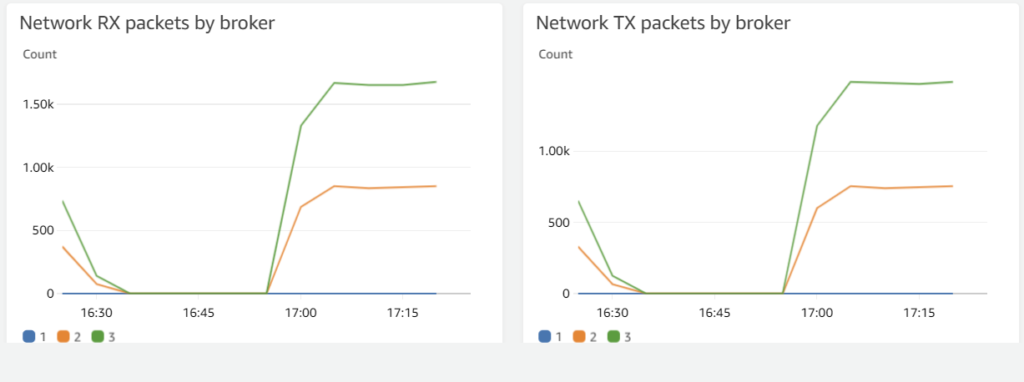

AWS MSK includes instrumentation regarding utilization and performance. Comparing the RX/TX packets received by the brokers from the Kafka producer in Azure reveals the impact of latency in terms of packet-per-second throughput.

The PacketFabric Virtual Cloud Router connection provides approximately 2x the throughput in packets.
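A back-of-the-envelope model suggests why a gap of roughly 2x is plausible. The sketch below assumes the producer is limited by a fixed number of requests awaiting acknowledgement, so its request rate is bounded by that number divided by the round-trip time. The value of 5 matches the Kafka producer default for `max.in.flight.requests.per.connection`, but we are not stating that this was the setting in the test, and the model ignores batching, processing time, and jitter. The RTT averages come from the latency table above; `max_requests_per_second` is a hypothetical helper.

```python
def max_requests_per_second(rtt_ms, in_flight=5):
    """Upper bound on acknowledged requests/s for a latency-bound sender.

    Assumes at most in_flight requests are outstanding at any time and
    each one takes a full round trip to be acknowledged.
    """
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    if in_flight < 1:
        raise ValueError("in_flight must be at least 1")
    return in_flight / (rtt_ms / 1000.0)


# Average RTTs (ms) taken from the ping comparison in this post.
VPN_RTT_AVG_MS = 132.9
PF_RTT_AVG_MS = 62.5

vpn_bound = max_requests_per_second(VPN_RTT_AVG_MS)
pf_bound = max_requests_per_second(PF_RTT_AVG_MS)
ratio = pf_bound / vpn_bound  # simplifies to VPN RTT / PacketFabric RTT

print(f"VPN bound: {vpn_bound:.1f} req/s")
print(f"PacketFabric bound: {pf_bound:.1f} req/s")
print(f"Ratio: {ratio:.2f}x")
```

Under these assumptions the ratio is simply the ratio of the two average RTTs, about 2.1x, which is in line with the approximately 2x difference in packets seen by the brokers. Note that the in-flight count cancels out of the ratio, so the conclusion does not depend on the exact setting.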

The links we could observe were never run at capacity, so link queueing was not a factor in the results.

VPN Results

PacketFabric Virtual Cloud Router Results

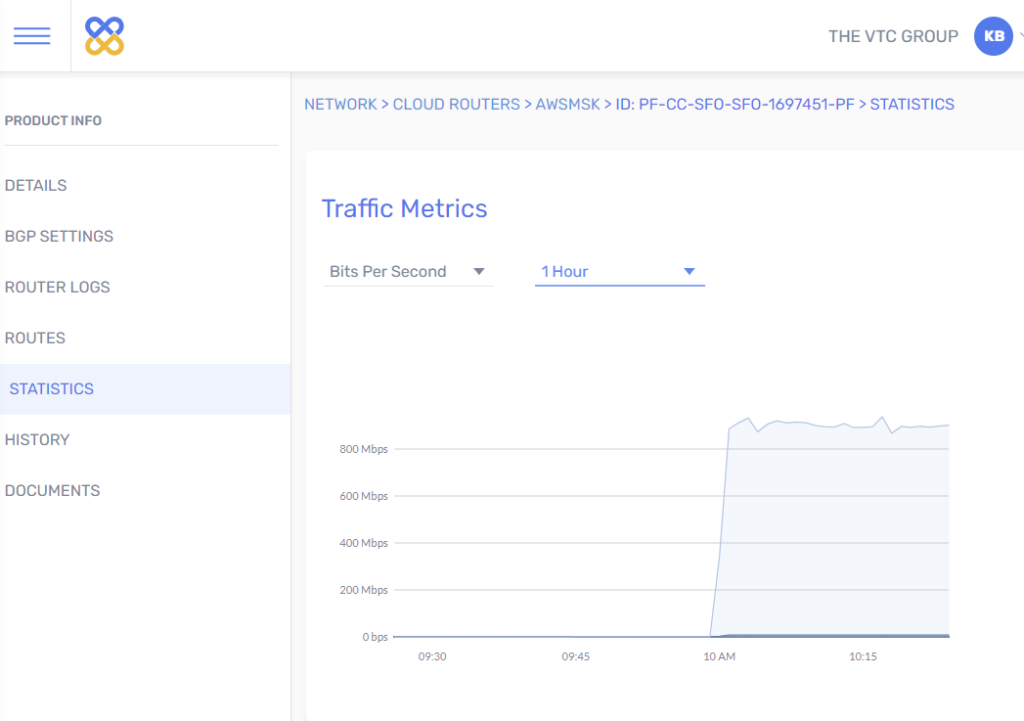

PacketFabric Virtual Cloud Router Traffic Metrics

As can be observed from the PacketFabric Portal, throughput on the circuit does not reach the 1Gbps capacity for the connection.

800Mbps of background traffic was generated with iperf3, with approximately 150Mbps of Kafka producer transactions making up the rest of the total traffic.

The combined total of ~950 Mbps is therefore below the bandwidth capabilities of both the VPN connections (gateway bandwidth varies slightly between Azure and AWS) and the PacketFabric Virtual Cloud Router connection.
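The traffic budget is simple arithmetic, sketched here in Python so the headroom is explicit. The figures are the ones quoted above (the Kafka figure is approximate), the capacity is the 1Gbps circuit speed, and `link_budget` is a hypothetical helper name.

```python
LINK_CAPACITY_MBPS = 1000  # 1Gbps PacketFabric circuit
BACKGROUND_MBPS = 800      # iperf3 background traffic
KAFKA_MBPS = 150           # approximate Kafka producer traffic


def link_budget(capacity_mbps, *flows_mbps):
    """Return total offered load, remaining headroom, and utilization."""
    total = sum(flows_mbps)
    return {
        "total_mbps": total,
        "headroom_mbps": capacity_mbps - total,
        "utilization": total / capacity_mbps,
    }


budget = link_budget(LINK_CAPACITY_MBPS, BACKGROUND_MBPS, KAFKA_MBPS)
print(budget)
```

The combined load comes to 950 Mbps, or 95% of the circuit, leaving about 50 Mbps of headroom. The test therefore stays below the capacity limit, which supports attributing the throughput difference to latency rather than bandwidth.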

Conclusion: Private Connectivity Matters

As we noted at the outset, it’s exceedingly common to start your cloud connectivity journey with the Internet. But if you’re working with high-volume and/or mission-critical data, you need the performance, SLA-backed reliability, and scalability of private connectivity. That doesn’t mean you should settle for the long provisioning times and inflexible contracts of traditional telco services. And what if you don’t have a data center to route through, or don’t want to spend latency and cost on an unnecessary routing hop just to connect cloud regions or providers together? This is precisely the need that PacketFabric Virtual Cloud Router is designed to fill. Learn more about Virtual Cloud Router and other PacketFabric services, or if you’re ready to connect, request a demo.