Multi-cloud has now gone mainstream, and the trades, from CIO to NetworkWorld, have added “Multi” to their “Hybrid” cloud advice sheets to help the C-suite keep up with the times.

Just about all of them point out (in one way or another) that WAN network intelligence is critical to the future multi-cloud experience, and I couldn’t agree more. Where our opinions might diverge is in the nature of the network intelligence “challenge” and the solution when constructing networks for multi-cloud workflows.

For starters, network data collection, correlation, causality, analytics and machine learning, aka “intelligence,” has ALWAYS been a problem. The architectural construct of the internet, in which each router/switch/appliance/device-that-processes-packets makes autonomous routing and forwarding decisions, immediately creates a “distributed computing” problem. The sheer volume of data from the devices across the optical, ethernet, IP and virtualization layers means it’s a “Big Data” problem. The fact that data is generated almost free-form across vendors, OSes and software IT systems makes this an “unstructured data” problem. The lack of context on the data that is affecting any one device/system/OS/subsystem/IT-stack, and on how it all interacts, means you need a rule-based engine (in the past decade) or, more recently, an “AI-ML” focused solution. That’s almost all the buzzwords of data science just to run a network. Hence the perennial operational questions asked by all IT administrators: “how can I tell what’s going on,” “is it running correctly,” “is it configured correctly,” “whiskey tango foxtrot is going on” or “what broke.” Regardless of what your networking construct might be, it’s hard if not impossible to answer these basic questions with the patchwork quilt of tools available.

With that said, it’s interesting that anyone in the “get to cloud” tool or controller industry believes they have complete network intelligence. Inside the cloud, many can monitor the VPC itself, including data and storage I/O. But when it comes to network intelligence outside the VPC, they tend to hit limitations: some only work in certain clouds because of software compatibility (the cloud gateway endpoint only runs in certain clouds), while others require some level of hardware integration that is vendor or cloud specific – like controller-based solutions that pull data out of SmartNICs, proprietary interfaces that are not available across all clouds, or reliance on intervening hardware or appliances to embed telemetry or flow context. Almost universally, none of the tools can solve the “outside the VPC” network intelligence problem: correlating a VPC to a virtual overlay to the actual underlay network. Certainly no single tool, or even a combination of tools, sees the full network.
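To make the “VPC to overlay to underlay” correlation concrete, here is a minimal sketch in Python. Every identifier in it (the VPC names, tunnel names, link names, loss figures and the 0.5% threshold) is a made-up assumption for illustration, not data or an API from any real product. It only shows the shape of the join: without the last two tables, a tool that sees only the VPC has nothing to correlate against.

```python
# Hypothetical inventory tables; all names and numbers are illustrative.
vpc_to_tunnel = {"vpc-a": "tunnel-1", "vpc-b": "tunnel-2"}
tunnel_to_links = {
    "tunnel-1": ["metro-1", "longhaul-7"],
    "tunnel-2": ["metro-2"],
}
link_loss_pct = {"metro-1": 0.0, "longhaul-7": 2.5, "metro-2": 0.1}


def links_for_vpc(vpc_id):
    """Walk VPC -> overlay tunnel -> underlay links."""
    tunnel = vpc_to_tunnel.get(vpc_id)
    return tunnel_to_links.get(tunnel, [])


def degraded_links(vpc_id, loss_threshold_pct=0.5):
    """Return the underlay links behind a VPC whose loss exceeds the threshold."""
    return [
        link
        for link in links_for_vpc(vpc_id)
        if link_loss_pct.get(link, 0.0) > loss_threshold_pct
    ]


if __name__ == "__main__":
    print(degraded_links("vpc-a"))
    print(degraded_links("vpc-b"))
```

The hard part in practice is not the lookup but owning all three tables at once, which is the point of the paragraph above.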

Almost all of the solutions born in the early days of hybrid cloud assumed the connectivity was primarily between the Enterprise and a single cloud – hub and spoke networking with bonus points for plates of tunnel spaghetti over some set of Internet service providers. “Ships in the night” overlays and underlays. More than one cloud meant more than one such overlay. Think of an enterprise hub-and-spoke going to the clouds/DCOs.

Anyone who has worked in the past with tunnels knows that overlays make answering those IT operational questions a whole lot tougher. Any answers come much more easily when you can make simplifying assumptions. In these early solutions, the assumption was that there was “probably” only one network provider between the endpoint and the target cloud and that cloud access point was “probably” at the edge of the same metro as the Enterprise endpoint. And maybe even the enterprise hardware was from the same vendor as the “controller.” Some even provided optimizations that tried to “make it so”.

With these assumptions in place, there can be some “divination” of the in-between network operational state. Most of the old goats of networking have experience in building “thumper” and “path trace” type applications – these are not new, but “divination” by pinging and tracing is a poor substitute for a complete network telemetric view (the look into the intermediary optical and routing devices, the link performance of the intervening connections) that incorporates the underlay.
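A toy illustration of why probing alone is “divination”: an end-to-end probe only observes the sum of the per-segment delays, so very different underlay conditions can look identical from the overlay. The segment names and millisecond values below are invented for the example.

```python
def end_to_end_rtt(segment_latency_ms):
    """What an overlay probe sees: one number, the sum across segments."""
    return sum(segment_latency_ms.values())


def worst_segment(segment_latency_ms):
    """What underlay telemetry can tell you: which segment is the slow one."""
    return max(segment_latency_ms, key=segment_latency_ms.get)


# Two hypothetical underlay states with congestion in different places.
congested_access = {"access": 32.0, "peering": 4.0, "cloud_onramp": 4.0}
congested_onramp = {"access": 4.0, "peering": 4.0, "cloud_onramp": 32.0}

if __name__ == "__main__":
    # The probe reports the same RTT in both cases...
    print(end_to_end_rtt(congested_access), end_to_end_rtt(congested_onramp))
    # ...but the culprit differs, and only per-segment data reveals it.
    print(worst_segment(congested_access), worst_segment(congested_onramp))
```

Path tracing narrows this somewhat, but it still infers the state of intermediate devices from the outside rather than reading it from them.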

Divination in such an overlay can’t do much about a “soft” problem in the provider access network, peering points or cloud onramp point (for example, your quality of service was degraded due to congestion) other than try another metro in hopes that the congestion was at the cloud provider on-ramp location (dual tunnels, hot tunnel routing, active-active tunnels etc.). You simply had very little view or control of the underlay reality. It was the network operating equivalent of hoping the answer was behind door number 1.

When things broke “hard” you could potentially troubleshoot based on those simplifying assumptions and inferences between the endpoints of your network overlay. You might be able to intuit what broke and who was responsible – as long as your design assumptions remained true. But, for reasons ranging from temporary outages to permanent economic realities (there wasn’t a public cloud onramp for a specific cloud in your metro), there were often less optimal paths in use that made troubleshooting much more cumbersome. It just wasn’t possible to get network data collected, correlated and analyzed to be able to fix or improve application performance or experience problems.

For these reasons, Enterprises that really cared about cloud performance started to adopt direct connection to their cloud provider. In its earliest stages, that adoption was fostered by exploiting prioritized or geo-specific peering through their telco/SP network provider. But, from a bandwidth, latency and jitter point of view, the Internet is called a swamp for a reason. You can’t program the existing internet to deliver the services you want from it, nor can you do anything about the rest of the traffic on the Internet affecting you. So, this work-around was quickly eclipsed by private connections, either direct or through third-party partners of the cloud provider. This made operation more deterministic, but still often forced the architecture to “hub” using the corporate network, through either the Enterprise or a colocation/on-ramp location. At best, you had less tunnel spaghetti and a bit more visibility (depending on your provider). At worst, you had substituted multiple overlays from multiple hubs if you were using multiple clouds, or a hybrid of a little bit of every solution.

When you move to multi-cloud, whether you attempt controller-based overlay technologies or piecemeal DCI solutions with cloud-based network intelligence programs, neither can substitute for a programmable, engineered network on a private fabric, where you get what you program from the network and you pay for what you use. The wheels of overlay and piecemeal hub-and-spoke building models simply come off when your traffic starts going cloud to cloud. Yet this is what the multi-vendor, unnecessarily complex market is presenting to an IT professional.

In an optimal multi-cloud architecture, your cloud resources should talk directly to each other over an engineered path. This means not transiting a hub construct (either at the Enterprise or a transit device in a select cloud), unless you want to sacrifice performance for visibility or sacrifice cost for control.
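The cost of hairpinning through a hub can be shown with a small worked example. The sketch below runs a textbook Dijkstra shortest-path search over a three-node graph; the node names and latency figures are assumptions chosen for illustration, and this is not a description of how any particular fabric computes its paths.

```python
import heapq


def shortest_path(graph, source, target):
    """Dijkstra over a dict-of-dicts graph; returns (total_cost, path)."""
    queue = [(0.0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical one-way latencies in milliseconds.
latency_ms = {
    "cloud_a": {"enterprise_hub": 18.0, "cloud_b": 12.0},
    "cloud_b": {"enterprise_hub": 22.0, "cloud_a": 12.0},
    "enterprise_hub": {"cloud_a": 18.0, "cloud_b": 22.0},
}

# Engineered path: let the search pick the cheapest route.
direct_cost, direct_path = shortest_path(latency_ms, "cloud_a", "cloud_b")

# Hub-and-spoke: traffic is forced through the enterprise hub.
hairpin_cost = (
    latency_ms["cloud_a"]["enterprise_hub"]
    + latency_ms["enterprise_hub"]["cloud_b"]
)

if __name__ == "__main__":
    print(direct_path, direct_cost)
    print(hairpin_cost)
```

With these made-up numbers the hub detour costs 40 ms against 12 ms direct. The real figures depend entirely on where the hub sits relative to the clouds.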

You need a fabric that integrates both underlay and overlay for this type of connectivity. Otherwise, who or how you transit at any particular time for any particular cloud-to-cloud connection will be a crapshoot, and so will jitter, latency and any true bandwidth guarantees.

Our fabric is made operational to our multi-cloud customers through Virtual Cloud Router – an intelligent, distributed routing instance per customer that enables cloud-to-cloud resource connections at up to 100 Gbps over our private, highly available, all-fiber network.

We are not a tunnel overlay over a third party (or collection of third party) network(s). We don’t provide a piecemeal solution with additional constructs you have to manage in different clouds (and pay for). We ARE the intervening network (a private network and on-ramp connectivity architecture) as well as all the devices providing your network abstraction (routing and virtual circuits on that infrastructure). We understand and can honor your “intent” through our network – not just at a shared onramp connection. We always find the shortest path between your clouds. Of course, we support segmentation for your security – that’s not a miracle but a basic construct of our network architecture.

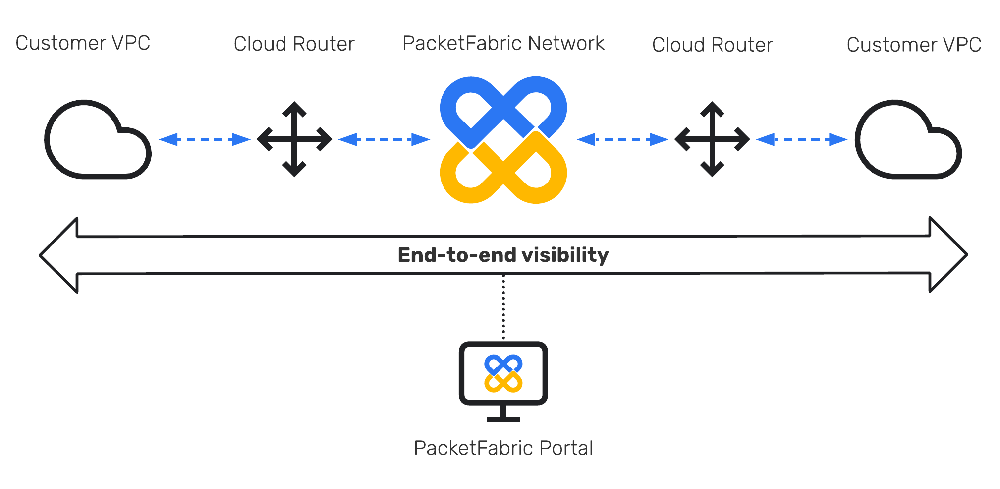

Virtual Cloud Router provides true WAN network intelligence through an operational monitor with end to end visibility and control for your multi-cloud WAN deployment.

Figure 1 PacketFabric provides full end-to-end network performance visibility for multi-cloud connectivity – to the cloud, between the clouds and to the VPC.

Because, as an operator, we offer cloud on-ramp and true converged fabric networking, and are open when it comes to working with multiple clouds and their VPC APIs, PacketFabric isn’t hampered by the product limitations of others. We can keep stats on the connectivity to the cloud, between clouds and to the VPC.

This means you (and your AIOps tools) have APIs to access all that data. And, because we handle all the device, service and feature integration, we can get to more data and thus more insights than most vendors and third-party data engines. No other product off the shelf can deliver this much visibility with such accuracy, precision and breadth of data sources across all clouds.

An overlay over a public network is a black box. You can only thump the box and attempt to divine what’s going on. You can do nothing about the performance of the intervening network. You can’t mitigate failures with any real precision.

Piecemeal DCI and DIA solutions never coalesce to operate like a common, integrated fabric.

So, while the cloud pundits and I are in complete agreement about the essential nature of WAN network intelligence to multi-cloud operation, I’m trying to figure out how the industry is going to reach the goal with an overlay product or a piecemeal solution that doesn’t have access to an underlay API to generate the full picture. The Internet is built in a layered architecture, but the shortcoming of the industry is the belief that the layers are independent. The goal of our Virtual Cloud Router and Fabric is to understand the relationship between these layers and the dependencies therein, giving you connectivity across all of them and visibility into each layer and their interdependence.